A passive xWDM optical network design for data center interconnect

Today, data centers offer impressive computing and storage capacity, and they can rightly be considered banks, because they store one of the most valuable things people have: their data. In this article, we analyze the problem of “business continuity” and how it can be guaranteed through high-speed interconnections that allow the resources and data of a data center to be virtualized.

Among the Over-The-Top (OTT) players, Google is surely one of the largest owners of data centers distributed worldwide. In Europe alone, it has four sites (Ireland, Finland, the Netherlands and Belgium), and a fifth is due to be added in Denmark by 2021, with an investment of roughly 600 million euros.

With over 175 zettabytes of data expected to be managed by 2025, data centers will continue to play a vital role in the acquisition, processing, storage and management of information.

But what is a data center, how is it built, why is it so important, and why does it cost so much?

DATA CENTER STRUCTURE AND CLASSIFICATION

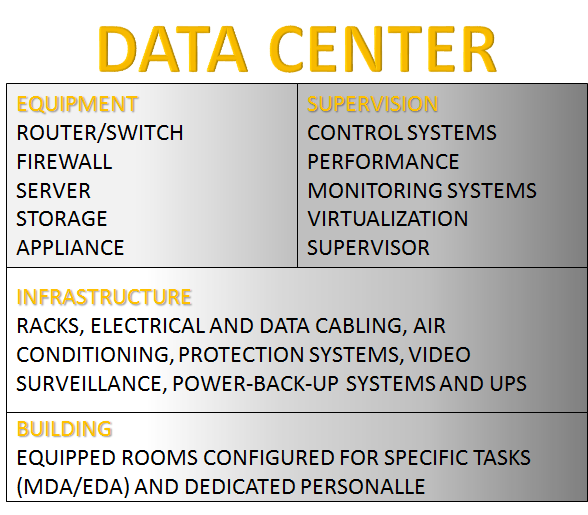

A data center is a facility whose size can range from a few tens of square meters to several floors of a building, or even several buildings. It is a set of subsystems dedicated to different tasks, such as information handling and safety and security, supported by a physical infrastructure (see Figure 1).

All of this requires extremely complex engineering work and specific multidisciplinary skills to ensure reliability, security and scalability over time.

For this reason, reference standards have been created, drawing on the best practices of different entities. A well-known example is the TIA-942 standard, which sets the rules for data center design and specifies requirements in terms of network architecture, electrical design, energy saving, system redundancy, risk control, environmental control and more.

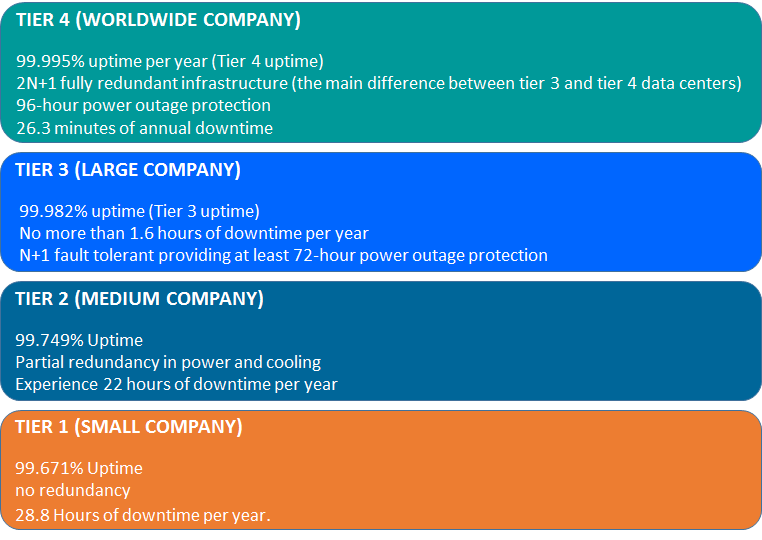

Compliance with these guidelines allows data center owners or operators to obtain certification from the Uptime Institute, an organization dedicated to evaluating the performance, efficiency and reliability of a data center’s infrastructure. Based on the criteria defined by the Uptime Institute, there are four levels (tiers) of data center reliability, commonly expressed as minutes or hours of downtime per year (Figure 2).
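The relationship between an availability percentage and annual downtime is simple arithmetic. The sketch below shows the conversion; the per-tier availability values are the figures commonly quoted for the four tiers and are an assumption here, since the values shown in Figure 2 may differ slightly. The function name is illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years

# Assumption: availability percentages commonly quoted for the four tiers.
# Replace them with the values from Figure 2 if they differ.
TIER_AVAILABILITY_PERCENT = {
    "Tier I": 99.671,
    "Tier II": 99.741,
    "Tier III": 99.982,
    "Tier IV": 99.995,
}


def annual_downtime_hours(availability_percent: float) -> float:
    """Return the hours per year a site is unavailable at a given availability."""
    return HOURS_PER_YEAR * (1 - availability_percent / 100)


for tier, percent in TIER_AVAILABILITY_PERCENT.items():
    hours = annual_downtime_hours(percent)
    print(f"{tier}: {percent}% -> {hours:.1f} hours/year ({hours * 60:.0f} minutes)")
```

With these assumed inputs, the gap between the lowest and highest tier is the difference between more than a day and less than half an hour of downtime per year.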

MAIN REASONS FOR DATA CENTER DOWNTIME

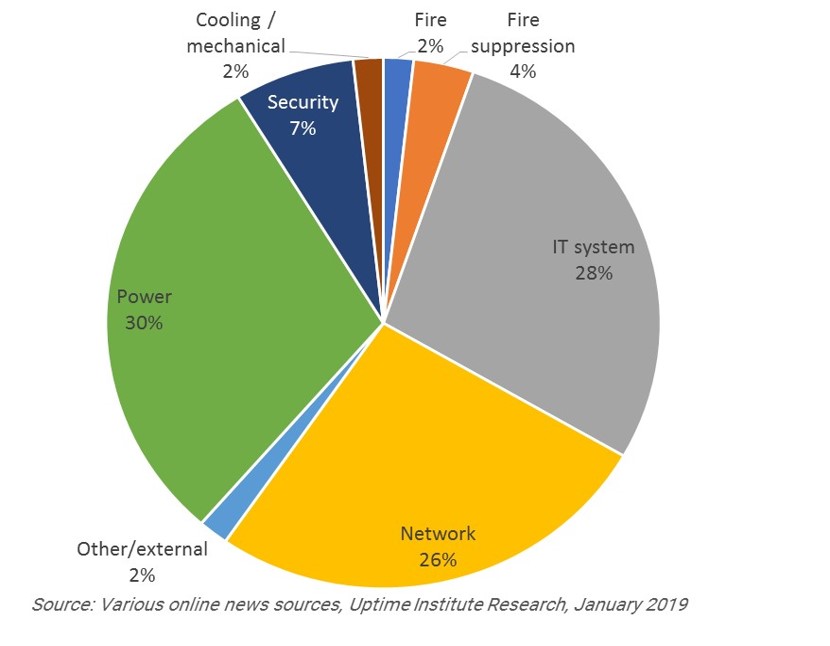

According to the Uptime Institute (2018 survey), power outages, network failures and software errors are the main reasons for data center shutdowns (see Figure 3).

Estimating the cost of such an event (i.e. data center unavailability) is not easy, partly because little information is publicly available. However, the institute has estimated that more than 30% of these occurrences generated losses ranging from 250 thousand dollars to one million dollars.

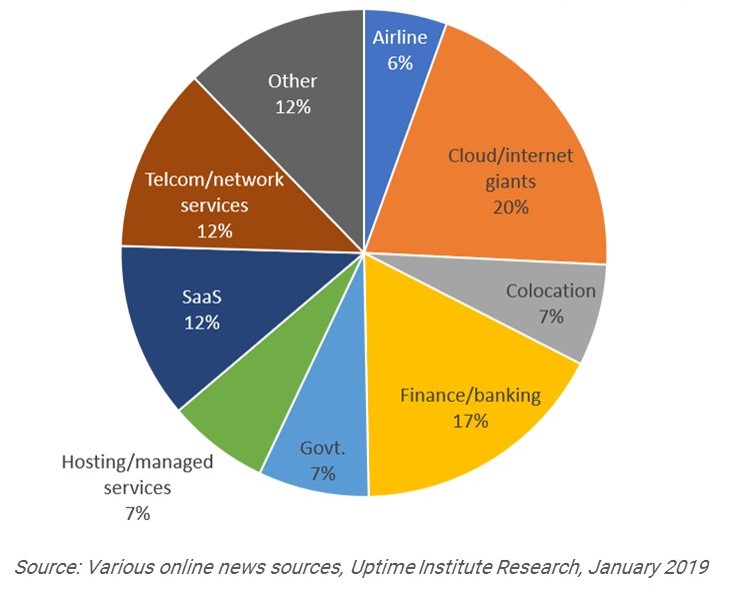

Looking at Figure 4, we can see that even the big Web players are affected by a “failure” or unavailability of their data centers, and we can guess that the losses estimated by the institute are surely underestimated.

DATA CENTER VIRTUALIZATION

One possible approach to business continuity is based on resource virtualization. From this perspective, resource virtualization can not only optimize power consumption and resource provisioning through an intelligent supervisor layer, but also prevent the effects of a fault occurring in a data center.

This does not mean that virtualization is a “panacea” for every cause of service interruption listed above, so the other best practices suggested for reliable data center design cannot be set aside.

In practice, by building several data centers, suitably sized and located in different areas, it is possible to distribute workloads by allocating the different service instances (access to your email, for example) to physically distinct but logically connected data centers that share information and data.

This is possible thanks to physical interconnection via high-speed optical networks, also known as Data Center Interconnect or DCI. Large organizations, such as banks or insurance groups, use one or more data centers connected by a public or private optical network, and they interconnect with partners, service providers or the public Internet within a third-party data center domain (such as Equinix).

CONSTRAINTS OF THE DCI NETWORK SCENARIO

The implementation of a DCI requires a careful assessment of several factors:

- Distance. The data centers to be connected can be spread over a larger or smaller area, and the distance between them affects latency.

- Capacity. The amount of data exchanged between data centers requires large line capacities that should be easily scalable. Depending on the case, bandwidth can scale from several 10 Gbps links up to interfaces with 100 Gbps optics.

- Security. Personal or business data, as well as financial transactions, require high security. Encryption protects the connections.

- Operation. Manual operations are laborious, complex, slow and extremely error-prone. Reducing them is an operational imperative. Open APIs are critical to the transition to automation because they enable scripting and the custom applications that are needed.

- Cost. Efficiency in transporting large data streams is important to ensure that costs do not scale in the same way as the bandwidth does. Financial sustainability is highly dependent on the networking solutions used to reduce the cost per transmitted bit.
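The effect of distance on latency, the first factor in the list above, can be estimated from the propagation speed of light in fiber. This is a minimal sketch that considers propagation delay only; the group index of 1.468 is an assumed typical value for standard single-mode fiber, and delays added by transceivers, switches or FEC are not included.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # speed of light in vacuum
FIBER_GROUP_INDEX = 1.468  # assumption: typical standard single-mode fiber


def one_way_latency_us(distance_km: float,
                       group_index: float = FIBER_GROUP_INDEX) -> float:
    """Propagation delay over a fiber span, in microseconds."""
    return distance_km * group_index / SPEED_OF_LIGHT_KM_PER_S * 1e6


def round_trip_latency_us(distance_km: float) -> float:
    """Round-trip propagation delay, in microseconds."""
    return 2 * one_way_latency_us(distance_km)


for km in (10, 40, 80):
    print(f"{km} km: one way {one_way_latency_us(km):.0f} us, "
          f"round trip {round_trip_latency_us(km):.0f} us")
```

The rule of thumb that follows is about 5 microseconds per kilometer one way, so an 80 km span adds roughly 0.4 ms in each direction before any equipment delay.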

DCI SOLUTION BASED ON PASSIVE xWDM OPTICAL NETWORK

Considering all these factors, a DCI design can be implemented using either a passive or an active optical transport network (OTN) solution.

Suppliers of active OTN equipment strongly criticize the passive approach, highlighting its management complexity, its limited power budget and scalability, and the lack of performance monitoring.

On the other hand, when the parameters listed above are not critical, a passive OTN solution is extremely advantageous from an economic perspective and, if well designed, can overcome some of these limits and be equally valid. In addition, a passive OTN solution consumes no energy, rarely breaks or degrades the way an active one does, and hardly ever requires periodic maintenance.

A passive OTN does not require strong skills in optical technologies, which is good news for service providers whose background is mainly in Ethernet/IP.

As for management, an optical network configuration is rarely modified, and this is certainly true in DCI applications.

As for scalability, with a passive OTN solution based on CWDM technology, a point-to-point connection can scale up to 160 Gbps (or even more with DWDM) simply by adding new 10G interfaces on transponders or Ethernet switches.
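The 160 Gbps figure follows from simple channel arithmetic. Here is a minimal sketch, assuming the ITU-T G.694.2 CWDM grid (18 nominal wavelengths from 1271 nm to 1611 nm, 20 nm apart) and assuming that 16 of them are populated with 10G optics, which is what the 160 Gbps figure implies. The function names are illustrative.

```python
def cwdm_grid_nm(first_nm: int = 1271, last_nm: int = 1611,
                 spacing_nm: int = 20) -> list[int]:
    """Nominal CWDM center wavelengths in nanometers (ITU-T G.694.2 grid)."""
    return list(range(first_nm, last_nm + 1, spacing_nm))


def link_capacity_gbps(populated_channels: int,
                       rate_per_channel_gbps: int = 10) -> int:
    """Aggregate capacity of a point-to-point link, one interface per channel."""
    return populated_channels * rate_per_channel_gbps


grid = cwdm_grid_nm()
print(f"CWDM grid: {len(grid)} wavelengths, {grid[0]}-{grid[-1]} nm")

# Assumption: the link starts with a few channels and grows to 16 over time.
for channels in (2, 8, 16):
    print(f"{channels} x 10G -> {link_capacity_gbps(channels)} Gbps")
```

Because the mux/demux is passive, growing from 2 to 16 channels means adding transceivers at both ends; nothing on the fiber path changes.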

Although some L1 devices (i.e. transponders or muxponders) can support encryption at this level, they are much more expensive than an encryption solution based on Ethernet switches with MACsec, which can connect two endpoints 60-80 km apart (the actual power budget of 10G SFP+ transceivers can easily reach such distances, and with FEC they can exceed this limit).
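Whether a given span fits within the power budget can be checked with a simple link-budget calculation. The sketch below is illustrative only: every numeric value (launch power, receiver sensitivity, fiber attenuation, mux/demux and connector losses, safety margin) is an assumption, not a figure from a datasheet, and should be replaced with the values of the actual transceivers and passive components.

```python
from dataclasses import dataclass


@dataclass
class PassiveLink:
    tx_power_dbm: float          # minimum transmitter launch power
    rx_sensitivity_dbm: float    # receiver sensitivity
    fiber_loss_db_per_km: float  # fiber attenuation at the channel wavelength
    passive_loss_db: float       # mux + demux + connectors, both ends combined
    margin_db: float             # safety margin for aging and repairs

    def power_budget_db(self) -> float:
        return self.tx_power_dbm - self.rx_sensitivity_dbm

    def total_loss_db(self, distance_km: float) -> float:
        return (distance_km * self.fiber_loss_db_per_km
                + self.passive_loss_db + self.margin_db)

    def is_feasible(self, distance_km: float) -> bool:
        return self.total_loss_db(distance_km) <= self.power_budget_db()

    def max_reach_km(self) -> float:
        usable_db = self.power_budget_db() - self.passive_loss_db - self.margin_db
        return max(0.0, usable_db / self.fiber_loss_db_per_km)


# Assumed values for a long-reach 10G channel; replace with datasheet figures.
link = PassiveLink(tx_power_dbm=0.0, rx_sensitivity_dbm=-24.0,
                   fiber_loss_db_per_km=0.25, passive_loss_db=5.0, margin_db=3.0)

print(f"Budget: {link.power_budget_db():.1f} dB, "
      f"max reach: {link.max_reach_km():.0f} km")
print("60 km feasible:", link.is_feasible(60))
print("80 km feasible:", link.is_feasible(80))
```

Under these assumed values the passive losses and margin consume a third of the budget, which is why the upper end of the 60-80 km range depends on the actual optics, on FEC, or on amplification.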

Recently, some manufacturers have made commercially available compact C-band EDFA amplifiers in the form factor of an SFP+/QSFP transceiver, which can be installed inside the same switch to extend the reach.

Moreover, the first 100G QSFP28 transceivers with a reach of 80 km are already available on the market, making a passive optical network design possible at this bit rate as well.

These are a few examples of technical solutions for a passive network design that is less expensive than an active solution and free of the “usual” limitations claimed by fans of active OTN solutions.

What has been said above is not a purely theoretical exercise: a passive interconnection service between data centers is provided by Sipartech in Paris, with a very interesting cost plan compared to a multi-year leasing contract for dark fiber.

This experience underlines the fact that passive optical transport solutions, in DCI applications as well as in other network scenarios such as fixed and mobile backhaul/fronthaul, have a very interesting and extremely competitive market compared to active OTN solutions.

They also offer interesting opportunities to the Infrastructure Providers in the wholesale market by allowing the rental of a “logical infrastructure” based on wavelengths rather than physical fiber.

Do you want to learn more? Please contact us here

ABOUT OPNET

OPNET Solutions is a company built on highly skilled people with decades of technical and managerial experience in the ICT, Telco and Energy industries and technologies.

Our purpose is to provide companies, big and small, with high-end tools and resources to empower and grow their business.